Quantifying Software Reliability via Model-Counting

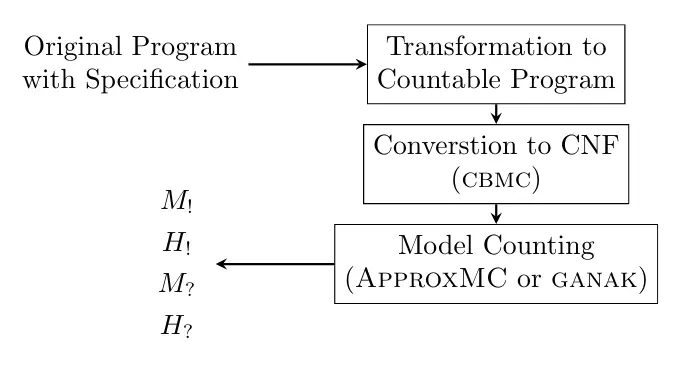

A pipeline for software quantification

A pipeline for software quantification

Critical software should be verified. But how to handle the situation when a proof for the functional correctness could not be established? In this case, an assessment of the software is required to estimate the risk of using the software.

In this paper, we contribute to the assessment of critical software with a formal approach to measure the reliability of the software against its functional specification. We support bounded C-programs precisely where the functional specification is given as assumptions and assertions within the source code. We count and categorize the various program runs to compute the reliability as the ratio of failing program runs (violating an assertion) to all terminating runs. Our approach consists of a preparing program translation, the reduction of C-program into SAT instances via software-bounded model-checker (CBMC), and precise or approximate model-counting providing a reliable assessment. We evaluate our prototype implementation on over 24 examples with different model-counters. We show the feasibility of our pipeline and benefits against competitors.