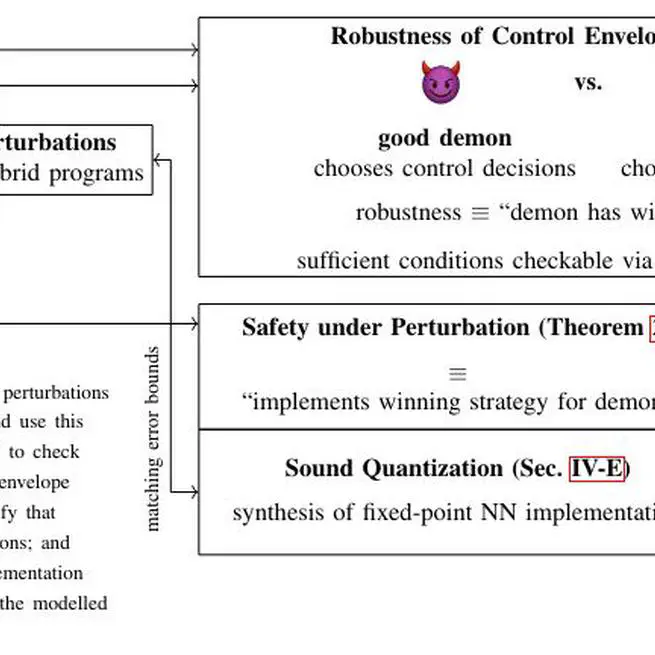

We extend the differential dynamic logic-based NN verification technique VerSAILLE (NeurIPS'24) to support verification under perturbations and leverage this result to derive end-to-end safety guarantees that carry over to fixed-point arithmetic implementations of (originally) real-valued NNs.

6. Oct 2025

We apply differential dynamic (refinement) logic to a case study on neural highway control. After modelling the abstract system, we use VerSAILLE and the NCubeV tool (NeurIPS'24) to (dis)prove the safety of concrete neural networks. Along the way, we uncover numerous interesting results on the highway-env simulation environment, including inconsistencies between the provided specification and the actual simulation.

10. Jun 2025

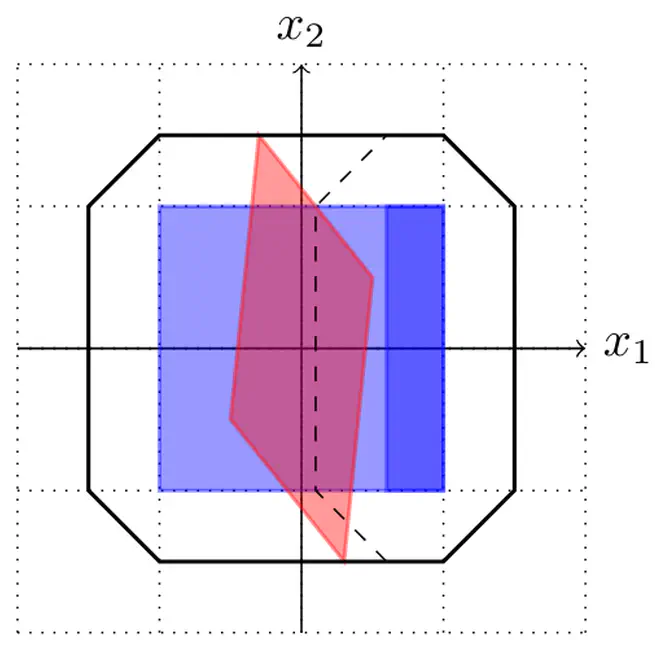

We introduce a new abstract domain for differential verification using zonotopes and explore which equivalence properties are amenable to differential verification. Furthermore, we propose an improved approximation for confidence-based verification of NNs with softmax output.

1. May 2025

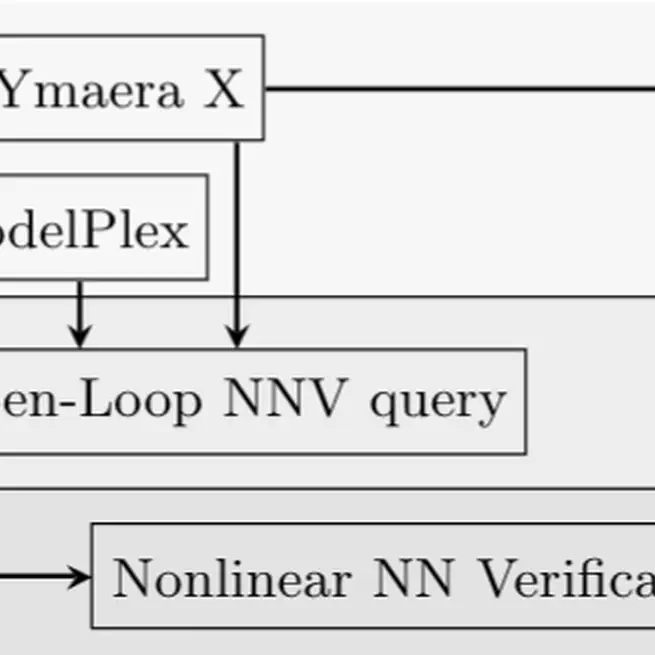

We present the first approach for the combination of differential dynamic logic (dL) and NN verification. By joining forces, we can exploit the efficiency of NN verification tools while retaining the rigor of dL. This yields infinite-time horizon safety guarantees for neural network control systems.

10. Dec 2024

We evaluate the potential of Large Language Models (specifically GPT-3.5 and GPT-4o) for the generation of code annotations in the Java Modelling Language using a prototypical integration of the Java verification tools KeY and JJBMC with OpenAI's API.

27. Oct 2024

An exploration of the relationships between qualitative and quantitative information flow and various causal and non-causal fairness definitions with applications to program analysis.

20. Feb 2024

A formal approach for the quantitative assessment of service-oriented software which combines high-level software architecture modelling with deductive verification.

8. Jan 2024

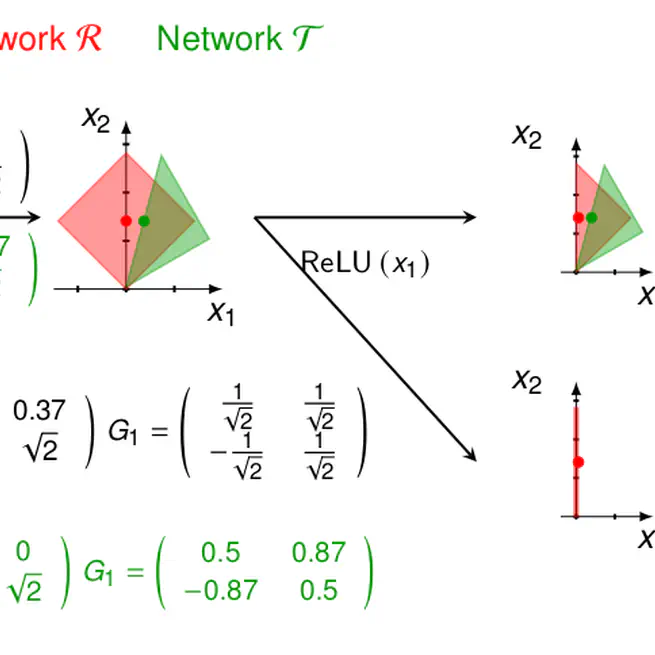

We present and evaluate an approach for proving equivalence properties on neural networks and show that the verification of $\epsilon$-equivalence is coNP-complete.

21. Dec 2021

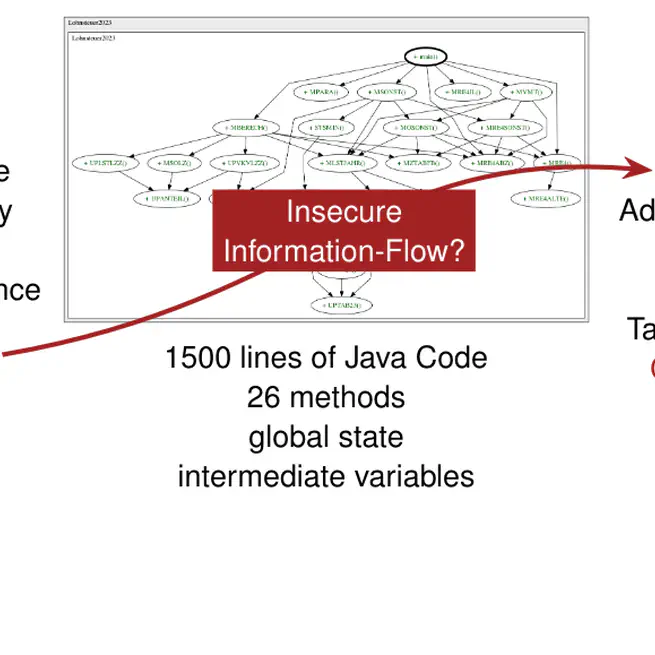

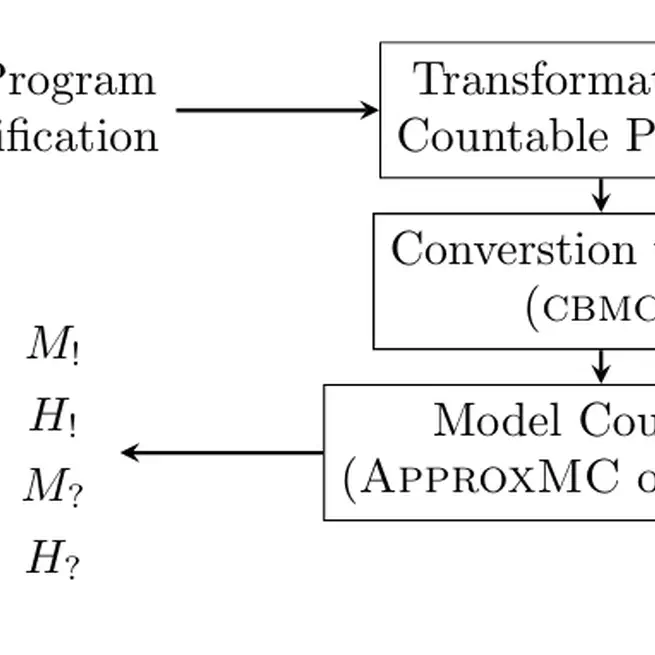

We present and evaluate a pipeline for quantifying how closely C programs adhere to their specifications.

23. Aug 2021